Run multiple Jobs on a single Server with Daestro

Sep 1, 2025 Batch Jobs Written by Vivek Shukla

Some jobs are neither CPU intensive nor memory hungry. Giving each of them a whole server leaves a lot of resources wasted unless we can assign the spare capacity to another Job.

When Daestro started, I left out the ability to run multiple jobs on a Compute Spawn (server) so that I could get the first beta out as soon as possible. It was always on the roadmap, though.

Now we can run multiple Jobs on a Compute Spawn.

Here’s a 10,000-foot overview of how it works:

- You can now set a CPU Quota and a Memory Quota in the Job Definition, so that each job is restricted to that specific amount of CPU and memory.

- When a Job is submitted, it may spawn a new Compute instance. If that instance’s total resources are less than the job’s defined quota, the job will use the instance’s full capacity, and no other jobs can run on it until the first one is complete.

- On the other hand, if the Compute Spawn has more CPU and memory than the job requires, the job will run using its specified quota, leaving the remaining resources free. Other jobs can then run alongside it, as long as they fit within the available CPU and memory.
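The placement rules above can be sketched in a few lines of Python. This is only an illustration of the behaviour described here, not Daestro's actual scheduler: the `Job` and `ComputeSpawn` classes, the `try_place` method and the `exclusive` flag are all hypothetical names. The sketch also assumes that a job counts as "bigger than the instance" when either its CPU or its memory quota exceeds the instance total.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cpu_quota: int      # percent; 100 = one full core
    memory_quota: int   # MB


@dataclass
class ComputeSpawn:
    total_cpu: int      # percent; 8 cores = 800
    total_memory: int   # MB
    exclusive: bool = False   # True when an oversized job owns the whole instance
    jobs: list = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.total_cpu - sum(j.cpu_quota for j in self.jobs)

    def free_memory(self) -> int:
        return self.total_memory - sum(j.memory_quota for j in self.jobs)

    def try_place(self, job: Job) -> bool:
        """Return True and record the job if it may run on this instance."""
        if self.exclusive:
            # An oversized job is already using the full capacity.
            return False
        oversized = (job.cpu_quota > self.total_cpu
                     or job.memory_quota > self.total_memory)
        if oversized:
            # Assumption: an oversized job is accepted only on an empty
            # instance, and then blocks it until it completes.
            if self.jobs:
                return False
            self.exclusive = True
            self.jobs.append(job)
            return True
        # Normal case: the job must fit in the remaining CPU and memory.
        if (job.cpu_quota <= self.free_cpu()
                and job.memory_quota <= self.free_memory()):
            self.jobs.append(job)
            return True
        return False
```

For example, on an 8-core instance with 16 GB of memory, a 150% job and a 600% job can share the server, but a third job asking for 100% has to wait because only 50% CPU is left.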

A few things to keep in mind:

- If you set a quota in the Job Definition, both the CPU and Memory quotas must be set.

- The minimum CPU quota is 10% (1/10th of a core) and the minimum Memory quota is 64 MB.

- A job can still run on a Compute Spawn that has fewer resources than the job’s quota, as long as it is the only job running on that instance.
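If you generate Job Definitions from your own tooling, it may help to check these constraints before submitting. Below is a minimal sketch of such a check. The function name `validate_quota` and the constants are my own for illustration and are not part of any Daestro API. It assumes that leaving both quotas unset is allowed.

```python
from typing import Optional

MIN_CPU_QUOTA_PERCENT = 10   # 1/10th of a core
MIN_MEMORY_QUOTA_MB = 64


def validate_quota(cpu_quota: Optional[int], memory_quota: Optional[int]) -> None:
    """Raise ValueError if the quota pair breaks the rules listed above."""
    if cpu_quota is None and memory_quota is None:
        return  # assumption: no quota at all is a valid configuration
    if cpu_quota is None or memory_quota is None:
        raise ValueError("CPU and Memory quota must be set together")
    if cpu_quota < MIN_CPU_QUOTA_PERCENT:
        raise ValueError(f"CPU quota must be at least {MIN_CPU_QUOTA_PERCENT}%")
    if memory_quota < MIN_MEMORY_QUOTA_MB:
        raise ValueError(f"Memory quota must be at least {MIN_MEMORY_QUOTA_MB} MB")
```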

Setting up CPU and Memory quota in Job Definition

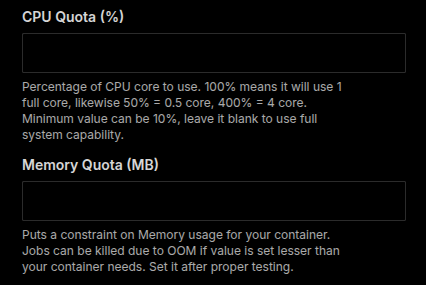

CPU Quota: The CPU Quota is the percentage of CPU cores the job may use, where 100% means 1 full core. For example, if a Compute Spawn has 8 CPU cores (or vCPUs), it has a total of 800% CPU capacity. To allocate 1.5 cores to your job, you would enter 150% in the CPU Quota field. The minimum value you can set is 10% (0.1 core).

Memory Quota: The Memory Quota is in MB. Be careful when setting this: if the value is too low, your job risks being terminated due to an Out Of Memory (OOM) error. Set it only after you’ve tested your container’s memory requirements.
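The percentage arithmetic is simple enough to sanity-check in a couple of lines. The helper names below are hypothetical and exist only to make the conversion explicit.

```python
def total_cpu_capacity(core_count: int) -> int:
    """Total CPU capacity of an instance, in percent (100 per core)."""
    return core_count * 100


def cores_to_cpu_quota(cores: float) -> int:
    """Convert a number of cores into the value for the CPU Quota field."""
    return round(cores * 100)


capacity = total_cpu_capacity(8)        # 800
quota = cores_to_cpu_quota(1.5)         # 150
jobs_by_cpu = capacity // quota         # 5 such jobs fit by CPU alone
```

Note that this counts CPU only. How many jobs actually share a Compute Spawn also depends on their Memory Quota.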

Limits on Job Run Concurrency

It’s important to understand the limits that are imposed on job concurrency by Daestro, and how you can set your own limits.

Project level limits: The only limit Daestro itself puts on Job Run Concurrency is at the project level, and it depends on the Plan you are on. You can learn more about it on the pricing page.

Job Queue: In a Job Queue, you can define your own limit on job run concurrency by setting the Max Concurrency field. A value of 0 means no limit at the queue level. The project-level limit always overrides the Job Queue setting. For example, if your plan’s limit is 10 concurrent jobs, you cannot exceed this, even if you set the Job Queue’s Max Concurrency to 20.
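Put differently, the concurrency you actually get is the smaller of the two limits, with 0 on the queue meaning that only the project limit applies. Here is a small sketch of that rule. The function name is hypothetical, and it assumes the project limit is always a positive number.

```python
def effective_max_concurrency(project_limit: int, queue_max_concurrency: int) -> int:
    """Concurrent job runs a queue can reach under both limits."""
    if queue_max_concurrency == 0:
        # 0 on the Job Queue means "no queue limit", so the plan's limit applies.
        return project_limit
    return min(project_limit, queue_max_concurrency)


effective_max_concurrency(10, 20)   # 10: the plan's limit wins
effective_max_concurrency(10, 4)    # 4: the queue is stricter than the plan
effective_max_concurrency(10, 0)    # 10: no queue limit, the plan still applies
```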